Archive for June 2015

UWP: New features of Visual State Manager (part 2)

In the previous post I described two new features of Visual State Manager, setters and adaptive triggers, and you could see that adaptive triggers react to window size only. But in real applications you may want to change the interface based on many other parameters, such as Xbox controller availability, screen orientation or data availability. So, in this article I want to show how to extend the existing trigger infrastructure by creating a new trigger.

It’s hard to find an application that is not connected to the Internet, but getting data from the Internet takes some time. Usually I use a ProgressRing to show that the data is loading, and I use VisualStateManager to show or hide elements based on data availability. Usually there are three states: Loading, Loaded and Error. Until now I had to write some code that changes the state of the page based on the state of the “view model”. Let’s see if it’s possible to implement our own trigger, which lets us avoid that code in the page’s code-behind class completely.

First of all we need to make sure that our “view model” class is ready for triggers. It’s better to implement a property that shows the current state of the model, as well as an event that fires every time the model changes its state. Of course, it’s better to implement this in a base class for all view models in the application.

    public enum StateEnum { Loading, Loaded, Error }

    public class StateChangeEventArgs : EventArgs
    {
        public StateEnum State { get; set; }
    }

    public delegate void StateChangedDelegate(object model, StateChangeEventArgs args);

    public class PageViewModel
    {
        public event StateChangedDelegate StateChanged;

        public void InitModel()
        {
            if (StateChanged != null)
                StateChanged.Invoke(this, new StateChangeEventArgs() { State = StateEnum.Loading });

            // load data

            if (StateChanged != null)
                StateChanged.Invoke(this, new StateChangeEventArgs() { State = StateEnum.Loaded });
        }
    }

I have implemented the InitModel method just as an example of where the StateChanged event should be raised. Usually you will implement this method in your own way in derived classes.
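The base class idea mentioned above (a state property plus a change event) could look roughly like the sketch below. It is only an illustration: StatefulViewModel, SetState and DemoViewModel are hypothetical names that do not appear in my project, and the standard EventHandler<T> delegate is used here instead of StateChangedDelegate.

```csharp
using System;

public enum StateEnum { Loading, Loaded, Error }

public class StateChangeEventArgs : EventArgs
{
    public StateEnum State { get; set; }
}

// Hypothetical base class: keeps the current state in a property
// and raises an event on every call to SetState.
public abstract class StatefulViewModel
{
    public event EventHandler<StateChangeEventArgs> StateChanged;

    // Current state of the model; Loading is the initial value.
    public StateEnum State { get; private set; } = StateEnum.Loading;

    // Updates the property and notifies all subscribers.
    protected void SetState(StateEnum newState)
    {
        State = newState;
        StateChanged?.Invoke(this, new StateChangeEventArgs { State = newState });
    }
}

// Hypothetical derived class: loadData stands in for the real download
// and returns false (or throws) when loading fails.
public class DemoViewModel : StatefulViewModel
{
    public void Load(Func<bool> loadData)
    {
        SetState(StateEnum.Loading);
        bool ok;
        try { ok = loadData(); }
        catch (Exception) { ok = false; }
        SetState(ok ? StateEnum.Loaded : StateEnum.Error);
    }
}
```

With this shape, derived classes never raise the event directly; they only call SetState, so the property and the event cannot get out of sync.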

Once you have updated the “view model”, you can create an instance of it inside the page XAML file:

    <Page.Resources>
        <st:PageViewModel x:Name="model"></st:PageViewModel>
    </Page.Resources>

It’s time to create your own trigger. To do that, you need to create a new class that inherits from the StateTriggerBase class. Inside the class you can declare any methods and properties, but you need to find a place where you will call the SetActive method. This method activates or deactivates your trigger. For example, I implemented the following class:

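    public class DataTrigger : StateTriggerBase
    {
        private PageViewModel model;

        public PageViewModel Model
        {
            get { return model; }
            set
            {
                // Detach from the previous model and guard against null
                if (model != null) model.StateChanged -= Model_StateChanged;
                model = value;
                if (model != null) model.StateChanged += Model_StateChanged;
            }
        }

        public string StateOfModel { get; set; }

        private void Model_StateChanged(object model, StateChangeEventArgs args)
        {
            // The trigger is active only while the model is in the configured state
            SetActive(args.State.ToString().Equals(StateOfModel));
        }
    }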

You can see that the class has two properties: one holds a reference to the current view model and the other defines the state that activates the trigger. Whenever the view model raises the StateChanged event, the event handler activates or deactivates the trigger.

Finally, I declared the following states:

    <VisualState x:Name="Loading">
        <VisualState.Setters>
            <Setter Target="gridView.Visibility" Value="Collapsed"></Setter>
            <Setter Target="progress.Visibility" Value="Visible"></Setter>
        </VisualState.Setters>
        <VisualState.StateTriggers>
            <st:DataTrigger Model="{StaticResource model}" StateOfModel="Loading"></st:DataTrigger>
        </VisualState.StateTriggers>
    </VisualState>
    <VisualState x:Name="Loaded">
        <VisualState.Setters>
            <Setter Target="gridView.Visibility" Value="Visible"></Setter>
            <Setter Target="progress.Visibility" Value="Collapsed"></Setter>
        </VisualState.Setters>
        <VisualState.StateTriggers>
            <st:DataTrigger Model="{StaticResource model}" StateOfModel="Loaded"></st:DataTrigger>
        </VisualState.StateTriggers>
    </VisualState>

It’s really cool and allows a cleaner implementation of the MVVM pattern.

UWP: New features of Visual State Manager (part 1)

If you are going to develop Universal Windows applications for Windows 10, you should think about an adaptive interface that works well on all Windows devices with different screen sizes, orientations and resolutions. As I mentioned in my previous post, Visual State Manager is a very good way to implement an adaptive interface. It allows you to declare different states of the UI and to change the current state at runtime. That’s why Microsoft continues to invest in this component, and today developers can use two more features there.

Setters

If you want to animate a state change, you can continue to use the old approach with a Storyboard, but in many cases animation is not needed. For example, if you want to change the layout because the user changed the screen orientation, you need to change control properties immediately. So, developers usually used ObjectAnimationUsingKeyFrames in order to make all needed changes in 0 seconds:

    <Storyboard>
        <ObjectAnimationUsingKeyFrames Storyboard.TargetName="itemListView"
                                       Storyboard.TargetProperty="Visibility">
            <DiscreteObjectKeyFrame KeyTime="0" Value="Visible"/>
        </ObjectAnimationUsingKeyFrames>
    </Storyboard>

You can see that this approach is not optimal, because it requires several complex objects with many parameters to do a simple thing. That’s why a new XAML element called Setter can be very useful. For example, you can make the same kind of change using the Setter element:

    <VisualState.Setters>
        <Setter Target="comboBox.Visibility" Value="Collapsed"></Setter>
    </VisualState.Setters>

It’s much clearer. Developers only need to declare the target property and its new value in the selected state.

If you want, you can mix Setters and a Storyboard inside the same state.

Adaptive triggers

Of course, it’s not enough to declare all possible states: developers need to implement code that changes the state dynamically. For example, if you are going to change the state based on screen size, you need to implement an event handler for the SizeChanged event and call the GoToState method of the VisualStateManager class. Sometimes it’s not clear when a state should be applied. Additionally, if you have several state groups and need to combine several states, you can easily make a mistake. That’s why Microsoft implemented an infrastructure for state triggers. It allows you to declare one trigger or a set of triggers in XAML that decide which state should be applied. So, you can declare all needed rules without writing any code at all.

In the current version Microsoft provides just one trigger, AdaptiveTrigger, but I hope that the release will bring some more. Additionally, you can develop your own triggers as well.

In the following code you can see usage of AdaptiveTrigger:

    <VisualState x:Name="Normal">
        <VisualState.Setters>
            <Setter Target="comboBox.Visibility" Value="Visible"></Setter>
        </VisualState.Setters>
        <VisualState.StateTriggers>
            <AdaptiveTrigger MinWindowWidth="700"></AdaptiveTrigger>
        </VisualState.StateTriggers>
    </VisualState>
    <VisualState x:Name="Mobile">
        <VisualState.Setters>
            <Setter Target="comboBox.Visibility" Value="Collapsed"></Setter>
        </VisualState.Setters>
        <VisualState.StateTriggers>
            <AdaptiveTrigger MinWindowWidth="0"></AdaptiveTrigger>
        </VisualState.StateTriggers>
    </VisualState>

You can see that AdaptiveTrigger has only two parameters: MinWindowWidth and MinWindowHeight. These parameters switch the state of the window based on its size. In our example, if the window is narrower than 700 pixels, we collapse the element called comboBox.
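The selection rule can be sketched in plain C#. This is only an illustration of the idea, not the platform’s implementation: it assumes that a trigger is active when the window is at least MinWindowWidth wide and that, among the active triggers, the one with the largest MinWindowWidth wins. The class and method names are hypothetical, and MinWindowHeight is ignored for brevity.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class AdaptiveStateSketch
{
    // Returns the name of the state whose MinWindowWidth is the largest value
    // that does not exceed the current window width, or null if none matches.
    public static string PickState(IReadOnlyDictionary<string, double> minWidths, double windowWidth)
    {
        return minWidths
            .Where(pair => windowWidth >= pair.Value)   // triggers that are active
            .OrderByDescending(pair => pair.Value)      // widest threshold first
            .Select(pair => pair.Key)
            .FirstOrDefault();
    }
}
```

For the two states above (Normal with 700 and Mobile with 0), a width of 500 selects Mobile, while 700 or more selects Normal. This is why the Mobile state can safely use MinWindowWidth="0": it acts as the fallback.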

In the next post I am going to show how to create your own trigger.

How to build your own drone (part 2)

Last time we discussed the common parts that you need to buy in order to assemble your drone. This week I received all the parts, and I will show how to assemble everything and get it ready to fly.

In the first step you need to assemble the frame and place some electronic components there: ESCs, motors and the power distribution board. Of course, it’s easy to assemble the frame itself, but before you can place the other components you need to do some soldering. I would not recommend soldering the ESCs directly to the power distribution board, because then you will not be able to remove the drone’s legs for transportation. Instead, I used XT60 connectors. So, you need to solder connectors to your ESCs and use some wires to attach matching connectors to the power distribution board as well. Don’t forget to use shrink tubes to avoid short circuits.

For the motors I am using banana connectors (3.5 mm) between motors and ESCs, because you need a way to swap the wires there to make each motor spin in the right direction. Additionally, you can use insulating tape to mount the battery. If you don’t have a 3D printer to print some components for your frame, insulating tape might help you from time to time.

At this step I recommend connecting the battery to the power distribution board to check that everything is soldered correctly. Use a multimeter to check that every ESC provides 5V through its control wires.

Once you have assembled the frame, you need to mount the flight control board and the RC receiver. Because these boards require 5V power, I used the power that the ESCs provide through their control wires. But each ESC has its own power wire, so you need to disconnect all of these power wires except one. You can use a knife to do this and insulating tape to insulate the removed wires.

In the next step you can mount the flight board and the RC receiver and connect them to each other. Don’t mount the propellers at this step, because you need to set up your flight controller first.

In the next step you can download the OpenPilot software and use its wizard to set up your drone. Thanks to the wizard, it’s the simplest part.

So, in the end I spent the following amount of time on each step:

· Frame assembly – 40 mins;

· Soldering (connectors, wires, motors) – 60 mins;

· Motors, ESCs and battery placement – 40 mins;

· Flight controller and RC placement – 20 mins;

· Setup – 10 mins;

Finally, my drone is ready to fly.

How to build your own drone (part 1)

During the Maker Faire event I demonstrated my own drone based on Netduino. I got lots of questions about hardware but not quite as many about software. Several people even told me that they had tried to assemble their own drone but failed. That’s why I decided to share my experience. I am on vacation this week, so I am putting aside software development and new APIs for some time to have some fun assembling a new drone.

I am going to build a powerful outdoor drone. So, it should be heavy enough to fly stably despite the wind, and at the same time it should have enough power to lift off.

Let’s start with the components that you need to buy.

Keep in mind that in some cases it takes some time to receive parts by mail. Usually I use robotshop.ca: if they have something in stock, you can get it in 2-3 days, but it’s a little bit expensive compared to Amazon and they only have a few components in each category. If I am ready to wait some time, I use amazon.ca or amazon.com. The second one has many more components and a 2-3 day delivery option for some of them, but you will need to visit the US to get your package.

First of all you need to decide which type of drone you are going to build and select the right frame. I would recommend starting with a quadcopter, but during my vacation I will build a hexacopter. It’s better to select a metal (aluminum) frame because it’s not easy to break. Of course, you can build your own frame from wood or metal parts, but you will spend much more time and money compared to existing frames, which start from $17. I have tested two frames: X525 and 650X6. They are pretty good and not very expensive.

Once you know the number of motors for your frame, you can select the motors. For a drone, you need brushless motors, which can spin your propellers at different speeds. Additionally, you need a special control board for each motor, called an ESC (electronic speed controller). The ESC runs the motor at the requested speed and supplies as much energy as it needs. Different brushless motors require different ESCs (based on voltage), so read the motor specification before buying ESCs. For the frames that I mentioned earlier I would recommend 1000KV motors like the A2212. You can even find packs of such motors with ESCs and propellers. I like these packs and usually buy them.

Motors and ESCs are the most expensive part of the drone, and the second is the battery pack. If you want to fly for 20-30 minutes you need a good LiPo battery, and you need a charger as well. I bought a 5100 mAh battery, but you can buy anything from 2200 mAh. Just verify that it provides 11.1 volts (a 3-cell pack), which guarantees enough power for your motors and control board. A charger is usually expensive as well, but you need a way to charge your battery.

To operate your drone you need a radio control (RC) transmitter and receiver. I have a FlySky RC, but you can buy anything. In general you can fly using only 4 channels and use the other channels for a camera or something like that. Pay special attention: from time to time you can find receivers that don’t work with common transmitters, so it’s better to buy both together from the same company. Of course, you can use WiFi modules to operate the drone from your phone or laptop, but in that case your drone will not be able to fly far away.

The most important part of your drone is the control board, which sends signals to the motors, receives signals from the RC receiver and runs the algorithms that stabilize the drone. Usually this board contains several sensors, like an accelerometer and a gyroscope, but you can add a barometer and GPS as well. For a first drone it’s enough to have just an accelerometer and a gyroscope. I would recommend an OpenPilot board because its software includes a wizard that helps set up your drone, and drones based on OpenPilot are very stable. I have used MultiWii boards as well, but you need to spend much more time setting them up, and I encountered some problems with the algorithms there. You can use Arduino, Netduino or even Raspberry Pi and implement your own algorithms, but that requires additional time and good math knowledge.

Finally, you need to buy the things that help you assemble and solder everything: a soldering iron, wires, connectors and a power distribution board. Once you have all of these, you are ready to start assembling your drone.

Next time I will show how to assemble my hexacopter step by step, but at the end of this post I want to share the list of expenses for my drone:

· Frame – $38

· Brushless motors (two packs, because I need at least 6 motors) – $146

· Power distribution board – $7

· Wires – $10

· Shrink Tubes – $3

· Banana connectors – $5

· Connectors – $8

· Battery – $38

· Charging station – $23 (probably, it’s better to buy more expensive charger in order to avoid big boom at your house)

· RC – $100

· Flight control board – $19

· Soldering iron and some stuff there – $30

So, the total price of my drone is about $427, but there are still opportunities to cut the price down. For example, you can buy a cheaper RC, battery etc. At the same time, you can increase the price by adding a camera, GPS, barometer etc.

Microsoft Canada at Vancouver Mini Maker Faire

Last weekend I got a chance to participate in the Vancouver Mini Maker Faire. I still don’t understand why it’s called “Mini”, because the exhibition filled all the space at the PNE Forum, including an outdoor exposition. And, of course, we had our own booth there as well. So, what was Microsoft doing there?

Half of our exposition demonstrated how Visual Studio supports IoT projects for different boards like Arduino, Netduino, Raspberry Pi 2 etc. I developed two rovers, one based on the EZ-B controller and one based on Arduino. For EZ-Robot I used the UniversalBot project for developing Windows 10 applications. For Arduino I used the instructions that you can find on windowsondevices.com, but you can also use Visual Studio to develop and deploy Arduino applications directly with the Visual Micro plug-in. Additionally, I developed a drone based on a Netduino board, so I used .NET Micro Framework and C# to develop all the stabilization algorithms there.

The second part of our exposition was around Kinect and 3D printing. Thanks to 3D printing support in Windows, you can develop your own applications very quickly without thinking about how to cut the model into slices or generate G-code. Additionally, you can use existing 3D printing software like 3D Builder to create your own models and print them.

Additionally, 3D Builder supports Kinect devices for 3D scanning of existing objects.

Of course, we used Kinect not just for 3D scanning but for entertainment as well. All attendees had a chance to dance in our Xbox One area, where we installed a Kinect-ready Just Dance game.

I hope that everybody had some fun visiting our booth as well as all the other expositions there.

UWP: Speech Recognition (part 2)

So, we already know how to transform text to speech and it’s time to talk about the opposite task.

Universal Application Platform (UAP) includes the Windows.Media.SpeechRecognition namespace, which offers several ways to recognize speech. You can define your own grammar, use an existing one or use a grammar for web search. In any case you will use the SpeechRecognizer class. Let’s see how to use this class in different scenarios.

Like the SpeechSynthesizer class, SpeechRecognizer has some static properties that tell you which languages are available for recognition. The first property is SystemSpeechLanguage, which returns the system speech language and is the default language as well. The next two properties, SupportedTopicLanguages and SupportedGrammarLanguages, may look confusing, because the Text to Speech classes have just one property for all supported languages. But SpeechRecognizer can recognize speech locally or use several dictionaries online. That’s why SpeechRecognizer has two properties: SupportedGrammarLanguages for general offline tasks and SupportedTopicLanguages for online grammars.

Before showing how to use SpeechRecognizer objects, you need to declare a capability in the manifest of your application that allows you to use the recognizer. Unlike Windows Phone 8.1, UAP doesn’t have any special capabilities for that, so you just need to declare the microphone capability. So, usually, your manifest will look like this:

    <Capabilities>
        <Capability Name="internetClient" />
        <DeviceCapability Name="microphone" />
    </Capabilities>

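Of course, that’s not enough: you also need to make sure that the user has granted the permission to your application. To do that, you can use the following code (AudioCapturePermissions is not a platform class but a helper class from the SDK speech sample mentioned at the end of this post, so you need to copy it into your project):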

    bool permissionGained = await AudioCapturePermissions.RequestMicrophonePermission();
    if (!permissionGained)
    {
        // ask user to modify settings
    }

In Windows 10 the user can disable microphone access for selected applications or for all applications at once. You can easily find the window that allows this (Settings->Privacy->Microphone).

If everything is OK with permissions, you can start calling the methods that implement the speech recognition logic.

Based on your scenario, you can use the following approaches to speech recognition:

· Predefined grammars – in this case the recognizer uses online grammars, so you don’t need to create your own. There are two options: a general grammar or a grammar based on the most popular web search queries. With the first grammar you will be able to recognize any text, while the second one is optimized for search;

· Programmatic list constraints – this approach lets you create a list of strings with particular words or phrases that the user can say. It’s better to use this approach when your application has a predefined list of commands. Additionally, you can change the list at runtime depending on the context in your application;

· SRGS grammar – with the SRGS language you can create an XML document that describes the grammar. It allows you to create more flexible applications without a hardcoded grammar inside;

Regardless of the selected approach, you need to implement the following steps:

· Create an object of the SpeechRecognizer class. It’s the simplest step and doesn’t require any special knowledge;

· Prepare your dictionary. To do that, you need to create an object of a class that implements ISpeechRecognitionConstraint. There are four constraint classes, but in this post I am going to talk about three of them: SpeechRecognitionGrammarFileConstraint, SpeechRecognitionListConstraint and SpeechRecognitionTopicConstraint. The first one creates a grammar based on a file: you just create a StorageFile object and pass it as the parameter. The second one uses a programmatic list as your grammar, and the last one supports predefined grammars;

· Once you have created a constraint (or constraints), you can add it to the Constraints collection of the SpeechRecognizer object and call the CompileConstraintsAsync method to finish all preparations. If there are no errors in your constraints, the method returns the Success status and you can go ahead;

· In the next step you can start recognition, and there are several options as well: you can call the RecognizeAsync method of the SpeechRecognizer object, or you can use the ContinuousRecognitionSession property and call its StartAsync method. The first method recognizes short commands using predefined settings, while the second one is adapted for continuous recognition of free dictation text. With RecognizeAsync you get the result in place, but with StartAsync you need event handlers for the ContinuousRecognitionSession.Completed and ContinuousRecognitionSession.ResultGenerated events;
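The steps above can be sketched for the simplest case, a programmatic list of commands. Treat it as a rough outline rather than finished code: the CommandRecognizer class, the command words and the "commands" tag are made up for illustration, and the code has to run inside a UWP application that already has the microphone permission.

```csharp
using System;
using System.Threading.Tasks;
using Windows.Media.SpeechRecognition;

public static class CommandRecognizer
{
    // Returns the recognized phrase, or null if compilation or recognition fails.
    public static async Task<string> RecognizeCommandAsync()
    {
        // Step 1: create the recognizer
        using (var recognizer = new SpeechRecognizer())
        {
            // Step 2: prepare a list constraint (illustrative commands)
            var commands = new[] { "start", "stop", "pause" };
            recognizer.Constraints.Add(new SpeechRecognitionListConstraint(commands, "commands"));

            // Step 3: compile the constraints and check the status
            SpeechRecognitionCompilationResult compilation = await recognizer.CompileConstraintsAsync();
            if (compilation.Status != SpeechRecognitionResultStatus.Success)
                return null;

            // Step 4: recognize one short command and get the result in place
            SpeechRecognitionResult result = await recognizer.RecognizeAsync();
            return result.Status == SpeechRecognitionResultStatus.Success ? result.Text : null;
        }
    }
}
```

A list constraint is used here because it needs no grammar file and no online service, so it is the easiest way to try all four steps.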

Additionally, you can use the methods that show the built-in dialog panels for speech recognition: just call the RecognizeWithUIAsync method.

If you want to find some examples of speech recognition I would recommend to use the following link. You can find speechandtts example there. Next time I am going to cover more interesting topics related to Cortana.

Raspberry PI 2 and analog input

Compared to Arduino, Raspberry Pi 2 doesn’t have analog pins or even PWM pins. If you check the IoT extension for Universal Windows Platform, you will discover three sets of classes: I2C, SPI and GPIO. The last one lets you use the Raspberry GPIO pins for sending or receiving high or low voltage only. So, if you want to create a drone or a cool robot based on Raspberry Pi 2 and powered by Windows 10, you need to answer the following questions:

· How to read analog signals;

· How to emulate PWM (pulse width modulation);

· How to read digital signals from various digital sensors (if these signals are not LOW or HIGH);

In this post I am going to answer the first question.

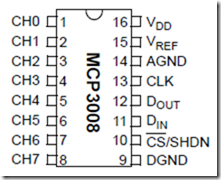

Because the GPIO of Raspberry doesn’t have any analog inputs, we need to use an external converter (ADC) that transforms the analog signal to digital. I would recommend converters from Microchip Technology, which offers a great selection of chips: MCP3002, MCP3008, MCP3208 etc. I bought an MCP3008 because it supports up to 8 channels and represents analog data in 10-bit format. Because Arduino and Netduino use the same format (from 0 to 1023), I am used to working with 10 bits.

MCP3008 works over the SPI bus, so we should use the SPI bus on Raspberry. To do that, I connected the CLK leg of the chip to the SPI0 SCLK pin (23), the D(out) leg to the SPI0 MISO pin (21), the D(in) leg to the SPI0 MOSI pin (19) and CS/SHDN to SPI0 CS0 (24). I connected the V(dd) and V(ref) legs to power (3.3V, because the Raspberry pins are not 5V tolerant) and the ground legs (AGND and DGND) to the ground.

I have only an analog photoresistor sensor. So, I used just channel 0 and connected the signal leg of the sensor to CH0.

Below you can find my code, which you can copy and paste into MainPage.xaml.cs of your Universal application. I didn’t make any interface and just used the Debug class to print the sensor’s data to the Output window:

    // Requires: using System; using System.Diagnostics; using System.Threading.Tasks;
    // using Windows.Devices.Enumeration; using Windows.Devices.Spi;
    // using Windows.UI.Xaml; using Windows.UI.Xaml.Navigation;

    byte[] readBuffer = new byte[3];
    // MCP3008 datasheet framing: start bit, then single-ended mode + channel 0
    byte[] writeBuffer = new byte[3] { 0x01, 0x80, 0x00 };

    private SpiDevice spi;
    private DispatcherTimer timer;

    private void Timer_Tick(object sender, object e)
    {
        spi.TransferFullDuplex(writeBuffer, readBuffer);

        // 10-bit result: the two low bits of byte 1 are B9..B8, byte 2 is B7..B0
        int result = ((readBuffer[1] & 0x03) << 8) | readBuffer[2];

        Debug.WriteLine(result.ToString());
    }

    protected async override void OnNavigatedTo(NavigationEventArgs e)
    {
        base.OnNavigatedTo(e);

        await StartSPI();

        this.timer = new DispatcherTimer();
        this.timer.Interval = TimeSpan.FromMilliseconds(500);
        this.timer.Tick += Timer_Tick;
        this.timer.Start();
    }

    private async Task StartSPI()
    {
        try
        {
            var settings = new SpiConnectionSettings(0); // chip select line CS0
            // 1 MHz: the datasheet limits the clock to 1.35 MHz at 2.7V and 3.6 MHz at 5V
            settings.ClockFrequency = 1000000;
            settings.Mode = SpiMode.Mode0;

            string spiAqs = SpiDevice.GetDeviceSelector("SPI0");
            var deviceInfo = await DeviceInformation.FindAllAsync(spiAqs);
            spi = await SpiDevice.FromIdAsync(deviceInfo[0].Id, settings);
        }
        catch (Exception ex)
        {
            throw new Exception("SPI Initialization Failed", ex);
        }
    }
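If you need more than channel 0, the command bytes and the decoding can be moved into small helper methods. The sketch below uses the framing from the MCP3008 datasheet (the start bit alone in the first byte, then the mode and channel bits in the high nibble of the second byte). Mcp3008Sketch and its methods are hypothetical names, and the voltage conversion assumes that the sensor output is measured against V(ref).

```csharp
using System;

public static class Mcp3008Sketch
{
    // Builds the 3-byte single-ended read command for a channel (0-7):
    // byte 0 carries the start bit, byte 1 carries SGL/DIFF = 1 plus the channel number.
    public static byte[] BuildCommand(int channel)
    {
        if (channel < 0 || channel > 7)
            throw new ArgumentOutOfRangeException(nameof(channel));
        return new byte[] { 0x01, (byte)((0x08 | channel) << 4), 0x00 };
    }

    // Extracts the 10-bit result: the two low bits of byte 1 followed by all of byte 2.
    public static int DecodeResult(byte[] readBuffer)
    {
        if (readBuffer == null || readBuffer.Length < 3)
            throw new ArgumentException("Expected at least 3 bytes.", nameof(readBuffer));
        return ((readBuffer[1] & 0x03) << 8) | readBuffer[2];
    }

    // Converts a raw reading (0-1023) to volts for the given reference voltage.
    public static double ToVolts(int raw, double vref)
    {
        return raw * vref / 1024.0;
    }
}
```

In the timer handler you would pass BuildCommand(channel) to TransferFullDuplex and then call DecodeResult on the read buffer.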

Windows 10: How to use IoT extension for Raspberry Pi 2 (part 2)

In the previous post we discussed how to add extensions to Universal projects and made a short overview of the GPIO classes. Today I am going to continue the overview of the IoT extension and we will discuss the I2C bus and its classes.

Last week I received an MPU6050 sensor, which provides data from a gyroscope and an accelerometer and is very useful for people who would like to build their own drones and helicopters. You can find many different boards on the market, including ones with just the 6050 chip. In my case I bought this one from http://robotshop.ca.

This sensor doesn’t require soldering and it contains a 5V to 3.3V converter (the 6050 chip uses 3.3V), which allows you to power it from an ESC or other boards with 5V power pins.

MPU 6050 uses the I2C bus to communicate. So, to get the sensor ready to work you need to connect the 5V and GND pins on Raspberry to VCC and GND on the sensor. Additionally, you need to connect the SDA and SCL pins. This sensor doesn’t have any LEDs, so it’s not easy to tell whether everything is OK. The simplest way to check that it works is to start developing something.

You can find all the needed classes in the Windows.Devices.I2c namespace, and the first one is I2cDevice. Each device that you connect over the I2C bus should be associated with an object of the I2cDevice class, and developers communicate with the device through this object. The most common methods there are Read and Write, which work with arrays of bytes in order to receive or send data. But in many cases you need to send data to the device to ask for something and then read the response. To avoid calling two methods, the I2cDevice class supports the WriteRead method. This method has two parameters, both arrays of bytes. The first array contains the data that you are going to send to the device, and the second one is the buffer for data from the device.

Thanks to I2cDevice, it’s easy to communicate with devices, but in order to get a reference to the I2cDevice object you need to accomplish several tasks.

First of all you need to get a reference to the I2C controller on the board (not your sensor but the existing I2C pins). Microsoft uses the same approach as for all other devices like Bluetooth, WiFi etc. You need to use a friendly name to create a query string for the device and try to find the device on the board. The GetDeviceSelector method of the I2cDevice class creates the query string, and you should use the I2C1 friendly name there. To find information about the existing device, you should use the FindAllAsync method of the DeviceInformation class. This method returns information about the available I2C controllers, and you can use this information to create the I2cDevice object. In the next step you need to create connection settings for your sensor. It’s easy to do using the I2cConnectionSettings class, passing the address of the sensor to the constructor. Once you have information about I2C on your board and connection settings for the external device/sensor, you can create the I2cDevice object using the FromIdAsync method.

So, for my MPU 6050 I created the following code:

    class MPU6050
    {
        // I2C address
        private const byte MPUAddress = 0xD2 >> 1;
        private I2cDevice mpu5060device;

        public async Task BeginAsync()
        {
            string advanced_query_syntax = I2cDevice.GetDeviceSelector("I2C1");
            DeviceInformationCollection device_information_collection =
                await DeviceInformation.FindAllAsync(advanced_query_syntax);
            string deviceId = device_information_collection[0].Id;

            I2cConnectionSettings mpu_connection = new I2cConnectionSettings(MPUAddress);
            mpu_connection.BusSpeed = I2cBusSpeed.FastMode;
            mpu_connection.SharingMode = I2cSharingMode.Shared;

            mpu5060device = await I2cDevice.FromIdAsync(deviceId, mpu_connection);

            mpuInit();
        }
    }

The mpuInit method sends initial values to the sensor, and I will describe it below. According to the documentation, the address of the sensor is 0xD2, but that value includes the read/write bit, while I2cConnectionSettings expects only the 7-bit address. That’s why I shifted the value one bit to the right, which gives 0x69.

Once we have the I2cDevice object, we can start working with the device. It’s not so easy, because MPU 6050 has lots of registers and you need to understand most of them. Additionally, you need to initialize the sensor to get values in the needed scale range etc. Let’s look at several registers:

· 0x6B – power management. It’s possible to configure different settings related to power mode, but the most important bit there is bit number seven. Setting this bit resets the sensor to its initial state;

· 0x3B – 0x40 – accelerometer data. There are 6 bytes that contain data for the x, y and z axes. Two bytes are required to represent the data for each axis, which is why there are 6 bytes (not 3). So, to form the result you need to use the first byte as the high byte of a short (int16) and the second one as the low byte;

· 0x41 – 0x42 – two bytes which represent temperature there – high and low byte;

· 0x43 – 0x48 – 6 bytes for gyroscope data (as for accelerometer);
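Combining the high and low bytes can be shown with a small helper. This is only a sketch: Mpu6050Sketch and its methods are hypothetical names, and the 6-byte block is assumed to hold the X, Y and Z values in that order, high byte first, as described in the list above.

```csharp
using System;

public static class Mpu6050Sketch
{
    // Combines a high and a low byte into a signed 16-bit value.
    public static short ToInt16(byte high, byte low)
    {
        // unchecked: values above 0x7FFF must wrap around to negative numbers
        return unchecked((short)((high << 8) | low));
    }

    // Splits a 6-byte block (X high, X low, Y high, Y low, Z high, Z low)
    // into three signed axis values.
    public static short[] ParseAxes(byte[] block)
    {
        if (block == null || block.Length != 6)
            throw new ArgumentException("Expected exactly 6 bytes.", nameof(block));

        return new[]
        {
            ToInt16(block[0], block[1]),
            ToInt16(block[2], block[3]),
            ToInt16(block[4], block[5])
        };
    }
}
```

The same helper works for the accelerometer block starting at 0x3B and for the gyroscope block starting at 0x43, because both use the same layout.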

So, you can use the mpuInit method to set up the initial state of the sensor. For example, you can reset the sensor using the following command:

mpu5060device.Write(new byte[] { 0x6B, 0x80 });

In order to measure something you can use WriteRead method. I don’t want to create much code in this post, so I want to show how to measure temperature only. You can use the following code:

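    // Temperature registers (high and low byte)
    private const byte MPU_REG_TEMP_OUT_H = 0x41;
    private const byte MPU_REG_TEMP_OUT_L = 0x42;

    // Reads a single register: writes the register address, then reads one byte back
    byte mpuRegRead(byte regAddr)
    {
        byte[] data = new byte[1];
        mpu5060device.WriteRead(new byte[] { regAddr }, data);
        return data[0];
    }

    public double mpuGetTemperature()
    {
        double temperature;
        short calc = 0;
        byte[] data = new byte[2];

        data[0] = mpuRegRead(MPU_REG_TEMP_OUT_H); // 0x41
        data[1] = mpuRegRead(MPU_REG_TEMP_OUT_L); // 0x42

        // High byte first, then low byte
        calc = (short)((data[0] << 8) | data[1]);
        temperature = (calc / 340.0) + 36.53;
        return temperature;
    }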

Later I am going to publish my MPU6050 class on GitHub, as well as some additional classes that you can use from C# in order to work with the accelerometer and other sensors.