Archive for November 20th, 2014

Kinect 2 and Unity 3D: How to…

In one of my earlier posts I described my first experience integrating Kinect with Unity. At that time only the beta version of the package was available, and it didn’t support the Face, Face HD and Fusion APIs. Today we have access to the release version of Kinect for Windows SDK 2.0 as well as to the Unity Pro packages. If you want to download them right now, you can find all the links you need here: http://www.microsoft.com/en-us/kinectforwindows/develop/downloads-docs.aspx.

Of course, you still need Unity Pro for the Kinect functionality, but if you want to test some features right now, it’s easy to activate a 30-day trial of Unity Pro. That should be enough to get a feel for what Kinect can do and to decide whether you want to build a business on it.

So, if you have already downloaded the Unity Pro package, you may have noticed that it contains three packages (.unitypackage files). The first file, Kinect.2.0.1410.19000.unitypackage, contains the base functionality of the Kinect SDK for Unity. It allows you to track bodies, leans, colors and so on. If you want functionality related to the face (emotions, HD face tracking etc.), you will need the second package, Kinect.Face.2.0.1410.19000.unitypackage. The last package contains an API for using data from Visual Gesture Builder, which makes it simpler to recognize predefined gestures.

Before I start writing code, I want to draw your attention to the hardware. In order to start working with the Kinect 2 SDK you need a Kinect 2 sensor. I know that the last sentence looks stupid but it’s reality :) I just checked the Microsoft Store and Kinect 2 for Windows costs around 200 dollars, which is very expensive if you just want to test something. But Microsoft announced a solution to this problem as well. If you have Kinect for Xbox One, you will be able to buy a special Kinect adapter for Windows, which allows you to connect the existing sensor to a PC. The adapter costs around 50 dollars, which is much cheaper than a new Kinect sensor. Because I already have an Xbox One, I decided to buy only the adapter.

The adapter comes in a pretty big box, because it’s not just a USB connector. Kinect requires more power than USB can provide, so the adapter also connects the Kinect to a power supply.

While we are on the subject of hardware, I want to point out some additional requirements. In order to build something with Kinect you will need the Windows 8 (x64) operating system, a USB 3.0 host controller and a DirectX 11 capable graphics adapter.

Finally, we are finished with the hardware, so let’s write some code.

Let’s start with a simple example where we manipulate a cube using the base API. To do this I created a new Unity Pro project and imported Kinect.2.0.1410.19000.unitypackage into it. Let’s put a cube in front of the camera and create a script attached to the cube. We are going to do all the work inside that script.

First of all we need to create some data fields in our class:

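    // The Kinect types below live in the Windows.Kinect namespace (using Windows.Kinect;).
    private KinectSensor _Sensor;
    private BodyFrameReader _Reader;
    private Body[] _Data = null;
    // Depth baseline for the right hand; -1 means "not captured yet".
    private float firstdeep = -1;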

We are going to use KinectSensor to access the Kinect. The KinectSensor class provides properties that return references to the data sources. Kinect supports several sources:

· BodyFrameSource – provides basic information about tracked people (up to 6), such as skeleton information, leans, hand states etc.;

· AudioSource – allows you to track a sound source from a specific direction;

· BodyIndexFrameSource – shows whether a particular pixel belongs to a body or to the background;

· ColorFrameSource – provides a copy of the video image that Kinect got from the camera;

· DepthFrameSource – each pixel of this frame represents the distance between Kinect and a tracked object (up to 4.5 meters);

· InfraredFrameSource – provides a black and white frame, which looks good under any lighting;

· LongExposureInfraredFrameSource – similar to the infrared frame but with better quality and less noise. It requires a longer period of time to get the data.

Each of these sources has an OpenReader method, which returns the appropriate reader. KinectSensor also has OpenMultiSourceFrameReader, which lets you read several sources through a single reader.

Since we only need basic body movements, we will use just BodyFrameReader. Additionally, we need an array of Body objects to store the current information about the bodies.

Now we are ready to write the initialization code. Unity calls the Start method of MonoBehaviour classes to provide a place for initialization. We will use this method to prepare our Kinect sensor and reader.

    void Start()
    {
        // Get the sensor connected to this machine.
        _Sensor = KinectSensor.GetDefault();
        if (_Sensor != null)
        {
            // Open a reader for body (skeleton) frames.
            _Reader = _Sensor.BodyFrameSource.OpenReader();
            if (!_Sensor.IsOpen)
            {
                _Sensor.Open();
            }
        }
    }

In the previous post about Kinect I wrote bad code and forgot to dispose my objects. It worked fine there, but if you make the same mistake here you will get data from Kinect only on the first launch of your game inside Unity. After that you will get neither data nor exceptions or any other sign of a problem. So, to avoid restarting Unity every time you launch your application inside it, we should implement the OnApplicationQuit method as well. There we dispose the reader and close the sensor.

    void OnApplicationQuit()
    {
        // Release the reader first.
        if (_Reader != null)
        {
            _Reader.Dispose();
            _Reader = null;
        }
        // Then close the sensor so that the next launch can open it again.
        if (_Sensor != null)
        {
            if (_Sensor.IsOpen)
            {
                _Sensor.Close();
            }
            _Sensor = null;
        }
    }

Now we are ready to implement the Update method, which is called on each frame update. This method contains less Kinect-related code. We need to get the latest frame using the AcquireLatestFrame method and, if a frame exists, initialize the Body array. Kinect supports up to 6 bodies, but instead of hard-coding this number we can size the array using the BodyCount property of BodyFrameSource.

Please don’t forget to dispose the frame right after the Body array has been filled.

Additionally, we should check whether at least one body is tracked. To do this we can use the IsTracked property of the Body class. If at least one body is tracked, we will use its index to start moving our cube. Here is my version of the Update method:

    void Update()
    {
        if (_Reader != null)
        {
            var frame = _Reader.AcquireLatestFrame();
            if (frame != null)
            {
                if (_Data == null)
                {
                    _Data = new Body[_Sensor.BodyFrameSource.BodyCount];
                }
                frame.GetAndRefreshBodyData(_Data);
                // Release the frame as soon as the body data is copied.
                frame.Dispose();
                frame = null;

                // Find the first tracked body.
                int idx = -1;
                for (int i = 0; i < _Data.Length; i++)
                {
                    if (_Data[i] != null && _Data[i].IsTracked)
                    {
                        idx = i;
                        break;
                    }
                }
                if (idx > -1)
                {
                    // An open right hand moves the cube; a closed hand leaves it in place.
                    if (_Data[idx].HandRightState != HandState.Closed)
                    {
                        var right = _Data[idx].Joints[JointType.HandRight].Position;
                        float horizontal = right.X * 0.1f;
                        float vertical = right.Y * 0.1f;
                        // Remember the initial depth of the hand as the zero point.
                        if (firstdeep == -1)
                        {
                            firstdeep = right.Z * 0.1f;
                        }
                        float deep = right.Z * 0.1f - firstdeep;
                        this.gameObject.transform.position +=
                            new Vector3(horizontal, vertical, deep);
                    }
                    // An open left hand rotates the cube.
                    if (_Data[idx].HandLeftState != HandState.Closed)
                    {
                        var left = _Data[idx].Joints[JointType.HandLeft].Position;
                        float anglex = left.Y;
                        float angley = left.X;
                        float anglez = left.Z;
                        // transform.rotation.x/y/z are quaternion components, not degrees,
                        // so the Euler angles are built from the hand position only.
                        this.gameObject.transform.rotation =
                            Quaternion.Euler(anglex * 100, angley * 100, anglez * 100);
                    }
                }
            }
        }
    }

As you can see, I used HandLeftState and HandRightState to decide when to change the properties of the cube. The user can “close” a hand to stop the cube from moving. The z axis shows the distance between Kinect and the user’s hand, so on the first tracked frame I store the hand’s current z position in firstdeep and treat it as the zero point. Thanks to that initialization I am able to move the cube backward or forward along z relative to where the hand started.
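The movement arithmetic described above can be tried outside Unity. Here is a minimal sketch, written in JavaScript only so that it runs without Unity or a sensor. The function name handToDelta and the plain {x, y, z} hand object are hypothetical, and it uses null rather than -1 to mean "no baseline captured yet".

```javascript
// Same scale factor as in the Update method.
var SCALE = 0.1;

// hand: {x, y, z} hand position in meters.
// baselineZ: scaled z captured on the first tracked frame, or null if not captured yet.
function handToDelta(hand, baselineZ) {
    var scaledZ = hand.z * SCALE;
    // The first call captures the baseline; later calls reuse it.
    var baseline = (baselineZ === null) ? scaledZ : baselineZ;
    return {
        dx: hand.x * SCALE,
        dy: hand.y * SCALE,
        dz: scaledZ - baseline,
        baselineZ: baseline
    };
}

// Usage: the first frame fixes the zero point, the next frame moves relative to it.
var first = handToDelta({ x: 0.2, y: 0.1, z: 1.5 }, null);
var next = handToDelta({ x: 0.2, y: 0.1, z: 1.2 }, first.baselineZ);
console.log(first.dz, next.dz);
```

On the first frame dz is zero by construction, and moving the hand closer to the sensor afterwards gives a negative dz, which is what moves the cube toward the camera.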

Next time we will create more advanced examples based on DepthFrameSource and AudioSource.

Azure Mobile Services: Creating a Universal Application

Windows Notification Service and Windows Runtime

It’s time to start developing a real application, which will have a user-friendly interface, implement several types of notifications and be ready for the Store. I don’t have experience in Android and iOS development, so I will develop only Windows Phone and Windows 8 applications and publish them to the Microsoft Store.

In the previous posts, we developed a Windows Phone (Silverlight) application without a specific interface and we used Microsoft Push Notification Service (MPNS) in anonymous mode, which is good for testing only. Today we are going to use Windows Notification Service (WNS), which is supported by Windows Runtime.

Windows Runtime was introduced almost three years ago as a platform for Windows Store applications with the Modern User Interface (Modern UI). It was presented as a new, native, object-oriented API for Windows 8, which helps to develop touch-enabled applications for ARM, x86 and x64 devices. At that time Windows Phone supported only Silverlight for business applications, so developers had to build different types of applications for Windows 8 and Windows Phone. Therefore, developers were waiting for a Windows Runtime implementation for Windows Phone, and it was announced this year for Windows Phone 8.1. So, today, developers can share code between WP and Windows applications and use many of their features in a common way. One of these features is Windows Notification Service, which is shared between the two platforms and replaces MPNS on WP.

In this post, we will use the Universal Apps template to develop both types of applications and we will share as much code as possible. We will use WNS to send three types of notifications.

Before we start coding, we should configure the Windows Application Credentials in our Mobile Service (Push tab), but this requires Store registration. So, if you don’t have a Store account yet, it’s the right time to create one. To create an account you can visit https://devcenterbenefits.windows.com/, where you will be able to get free access to the Dev Center dashboard.

If you have an account in the Store, you should go to the dashboard and start submitting a new Windows Store application (I know that we haven’t developed it yet). During the submission process, you will reserve the application name and provide selling details. But what we need is the next step, which generates the client secret key for our application. Let’s click the Live Service site link there.

You will be redirected to the App Settings page, where you can find the Package SID, Client ID and Client secret.

This information is required to send notifications to registered devices. So, copy these keys and put them into the identity tab of Mobile Services.

Additionally, I want to make several remarks here.

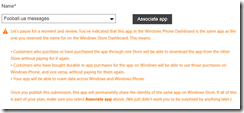

First of all, today we have a chance to associate our Windows Phone and Windows 8 applications. In this case they will use the same notification identities as well as many other features. To create the association, you need to visit the Windows Phone dashboard and start submitting a new application. In the first step you will be able to select the name that you already reserved in Windows Store. You should click the “Associate app” button to create the association between our Windows 8 and Windows Phone applications.

Right after you have reserved names for your applications in Windows Store and Windows Phone Store, you can download all the needed information to your Visual Studio projects as well. To do this you can use the context menu of each of our projects. If you forget to do it, you will not be able to test your applications, because they will not receive notifications due to the wrong package identity.

Server-side for Windows Runtime application

We finished configuring Mobile Services, but in order to send server-side messages to registered devices we need to modify our Insert trigger. In the previous article, we implemented a simple JavaScript trigger, which was able to send notifications through MPNS. Let’s replace MPNS with WNS. Additionally, I am going to add more notification types, such as RAW and Tile notifications. RAW notifications are delivered while an application is active and allow you to update the content of the application in real time. Tile notifications are used to update the application Tile.

Below you can find code that sends Toast notifications; we will add Tile notifications later.

    function insert(item, user, request)
    {
        // Insert the item first and notify devices only if the insert succeeds.
        request.execute(
        {
            success: function()
            {
                // null as the first argument means no tag filter.
                push.wns.sendToastText02(null,
                {
                    text1: "Football.ua",
                    text2: item.text
                },
                {
                    success: function (pushResponse)
                    {
                        console.log("Sent push:", pushResponse);
                    }
                });
                request.respond();
            }
        });
    }

When we used MPNS, we simply called the sendToast method, but Windows 8.1 as well as Windows Phone 8.1 support different presentations of Toast and Tile notifications. In this application, I decided to use the ToastText02 template, which shows text1 as the title of the notification (single line) and text2 as the body of the notification (two lines on the screen). You can use any other template for your applications; the available ones can be found here.
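For reference, the ToastText02 template corresponds to a small XML payload that WNS delivers to the device. The sketch below builds that payload by hand to show how text1 and text2 map onto the template. The helper names escapeXml and buildToastText02 are hypothetical and are not part of the Mobile Services API, where push.wns.sendToastText02 prepares the payload for you.

```javascript
// Hypothetical helper: escape characters that are special in XML.
function escapeXml(value) {
    return String(value)
        .replace(/&/g, "&amp;")
        .replace(/</g, "&lt;")
        .replace(/>/g, "&gt;")
        .replace(/"/g, "&quot;");
}

// Hypothetical helper: text1 becomes the title line (id 1),
// text2 becomes the body text (id 2) of the ToastText02 template.
function buildToastText02(text1, text2) {
    return '<toast><visual><binding template="ToastText02">' +
        '<text id="1">' + escapeXml(text1) + '</text>' +
        '<text id="2">' + escapeXml(text2) + '</text>' +
        '</binding></visual></toast>';
}

// Usage with the same title as in the trigger and a sample body text.
console.log(buildToastText02("Football.ua", "Sample news title"));
```

Other toast templates differ mainly in the template name and in the number of text and image elements inside the binding.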

Interface of our application

Now we are ready to create our client application. As the first step, we should enable Toast capabilities in our application. We can do this in the manifest file of the application (Package.appmanifest). You can use the manifest designer (just click the manifest), but you should be careful, because the Universal Apps template does not create a single application for both platforms. Instead you will have two projects as well as two applications. That’s why you should make your modifications inside each manifest (in both projects). Just click the Application tab and set Toast capable to Yes.

Windows 8 and Windows Phone 8.1 don’t require you to create your own settings page to let the user switch notifications off and on. Windows 8 generates an off/on option inside the Settings charm, and Windows Phone has a “notifications+actions” page under the Settings menu.

In the next stage, we should add the Windows Azure Mobile Services package using the NuGet manager. We already did this in the previous posts. In this case, you should do it for both projects. Finally, we are ready to write some code.

We will start with code that requests a notification channel for our device and sends the channel to our Mobile Service. Because we need to do this on every application launch, we will use the App.xaml.cs file. By default, this file is shared between the Windows Phone and Windows applications. We will not change this behavior, because WP and Windows 8 applications have the same application model, so the code can be shared.

To create a notification channel we will use the PushNotificationChannel and PushNotificationChannelManager classes. MSDN says that we should store our notification channel after each request and compare a new channel to the old one in order to avoid sending duplicates to our server side. However, I decided to skip this practice. We will request the notification channel for our application on every launch event and send it to Mobile Services at once. We should keep in mind that there might be a problem with the Internet connection, so we will catch the related exceptions and, in case of an exception, continue launching our application without notifications. This behavior is justified because we will load old data at the next stage, so we will have a chance to notify the user about a network problem there. In any case, we cannot treat the notification channel as a guaranteed way of delivering our messages.

I propose the following method for requesting a notification channel and registering it in Mobile Services:
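For readers who prefer to follow the MSDN recommendation, the decision can be reduced to one small check. The sketch below is written in JavaScript purely to illustrate the logic; the client in this post is C#. The function name needsRegistration and the stored record shape { uri, savedAt } are hypothetical. The 30-day limit reflects the fact that WNS channel URIs expire after 30 days.

```javascript
// WNS channel URIs expire after 30 days.
var MAX_AGE_MS = 30 * 24 * 60 * 60 * 1000;

// stored: { uri, savedAt } saved after the previous registration, or null.
// currentUri: channel URI returned by the platform on this launch.
// nowMs: current time in milliseconds.
function needsRegistration(stored, currentUri, nowMs) {
    if (!stored) {
        return true;                       // nothing was registered yet
    }
    if (stored.uri !== currentUri) {
        return true;                       // the platform issued a new channel
    }
    return nowMs - stored.savedAt >= MAX_AGE_MS;  // the old registration is too old
}

// Usage: the same URI one day later does not need to be sent again.
var day = 24 * 60 * 60 * 1000;
console.log(needsRegistration({ uri: "https://example.invalid/ch1", savedAt: 0 },
                              "https://example.invalid/ch1", day));
```

The trade-off is one extra piece of local state in exchange for fewer registration calls; sending the channel on every launch, as this post does, is simpler and always up to date.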

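    private async void CreateNotificationChannel()
    {
        try
        {
            // Ask the platform for the notification channel of this application.
            PushNotificationChannel channel =
                await PushNotificationChannelManager.CreatePushNotificationChannelForApplicationAsync();
            MobileServiceClient mobileService =
                new MobileServiceClient("https://test-ms-sbaydach.azure-mobile.net/");
            // Register the channel URI in Mobile Services.
            await mobileService.GetPush().RegisterNativeAsync(channel.Uri);
        }
        catch
        {
            // No network or registration failed: continue launching without notifications.
        }
    }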

Please note that we don’t use the application key on the client side, so you need to modify the permission for the registration script accordingly.

Now we can put the method call at the beginning of the OnLaunched event handler, and this will allow us to receive Toast notifications right away.

It’s time to implement the business logic of our application, but I will leave that for the next post.